
DeliAli


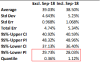

Wow, the pass list was only 72 out of 274, which equates to just 26.3%. This is very disappointing.

Thank you, Uroš. I would wait until the 3rd of January and then email them again. Your email has probably been seen, but the answer may need input from other people who are most likely out of the office.

Dear xxx,

Thank you for your email.

The SA3 Examiner Report states that performance on this paper was lower than it has been for a number of years and that “candidates appeared underprepared”.

More information about the aims of this subject, how it is marked, and student performance in the September 2018 exam is given in the SA3 Examiner Report, which can be found on the website.

I hope this provides you with the information you require.

Kind regards,

xxx

Senior Assessment Executive